Bands have been known to declare “No Synths!” on their albums. This statement was a badge of pride indicating that the artists hadn’t used any modern trickery in their recordings.

Today, the growing use of artificial intelligence (AI) and large language models (LLMs) in science has created a similar scenario. Advocates argue that these tools improve every aspect of science, including the publication process. Meanwhile, “AI refuseniks” have emerged who steadfastly avoid these tools.

Our most recent paper and its associated 6,000 lines of code were written entirely by my co-author and me. No synths! But will it be our last? This post takes a look at the use of AI and LLMs in the scientific publication process.

ChatGPT one year on

At the time of writing, ChatGPT, the popular LLM text generator, has been available for one year. It was predictable that tools like this would be used in science and academia. From students cheating on essays or generating word-perfect emails asking for PhD supervision, to seasoned academics wanting to cut corners when reviewing papers and grant applications, there has been no shortage of controversy.

At the same time, there is a large “responsible use” faction advocating for the use of these tools in scientific publications (more background here). Is the use of AI and LLMs in science inevitable?

AI Refuseniks

For most of this year, I have classified myself as an AI refusenik. The thought that I would use LLMs for writing a scientific paper was, and still is, preposterous. Writing is thinking. Formulating ideas, engaging deeply with the data, and immersing myself in the field. These are key parts of writing a paper. The process spurs new thoughts and theories that form our future research. Writing a paper is valuable and a process that I enjoy. It is not one that I could outsource to an LLM.

The proponents argue that using this technology is no different than using a grammar checker and that routine aspects of the writing process can be efficiently handed off to an LLM. Refuseniks counter that if you didn’t write it, why should anyone read it?

I see a parallel here with the introduction of synthesisers into rock music.

No Synths!

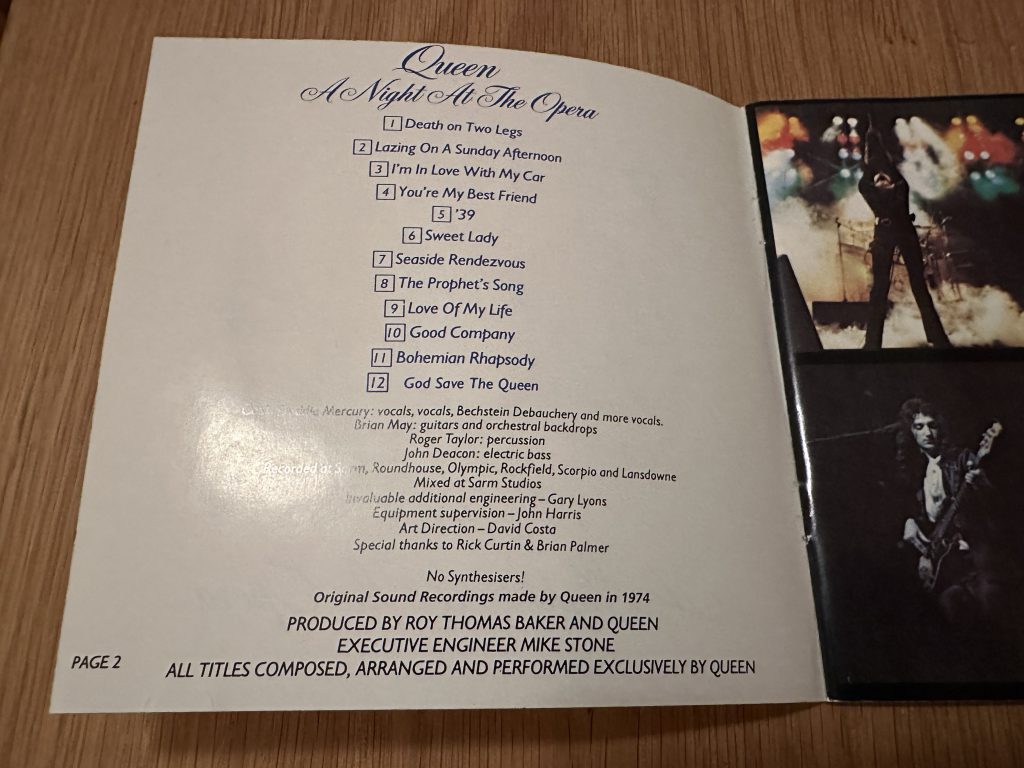

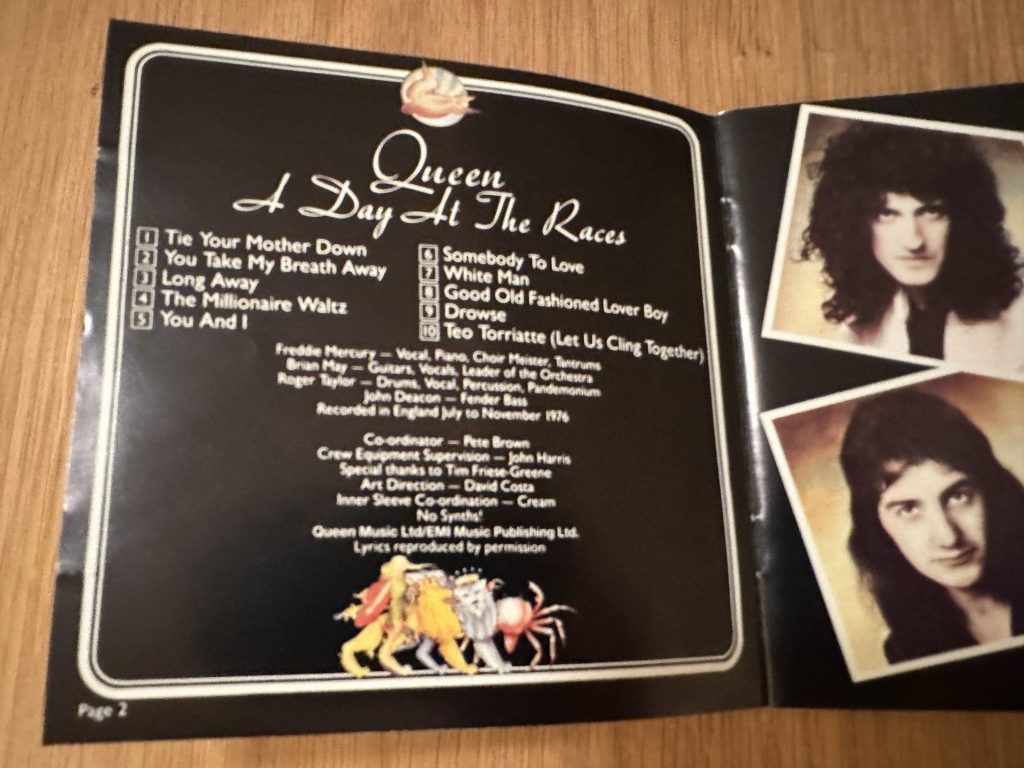

Queen famously proclaimed “No Synths!” on several albums in the 1970s. The roots of this declaration came from their annoyance at critics mistakenly suggesting that Brian May’s guitar sound was generated by a synth. It also reflected the fact that while Queen happily used multi-tracking, varispeed and all kinds of studio tricks, the instrumentation on the albums was vocal, guitar, bass, drums and… no synthesisers. They were against the use of synthesisers because they felt that the sounds available with the technology at the time were not good enough to use on a Queen album. Other groups of the era happily adopted the technology, for example on “On The Run” from Pink Floyd’s The Dark Side of the Moon.

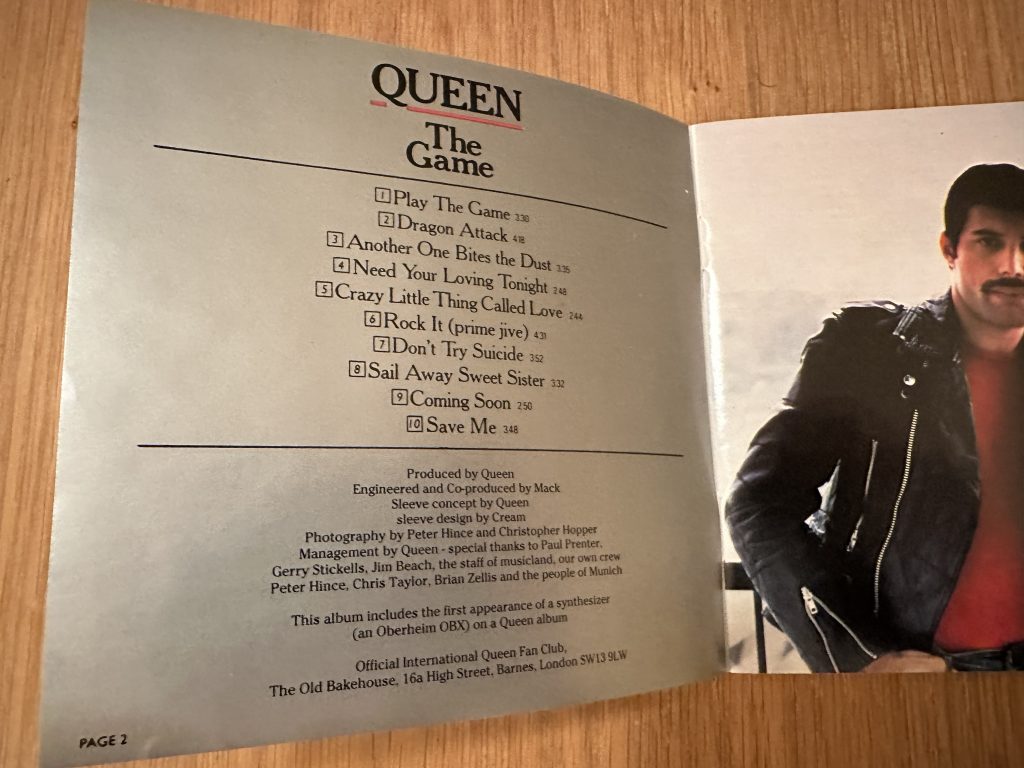

The “No Synths!” statement was in place for a string of Queen’s album releases. However, on “The Game”, released in 1980, the liner notes stated “This album includes the first use of a synthesizer (Oberheim OBX) on a Queen album”.

Possibly in homage to Queen, Rage Against The Machine, on their debut album, proclaimed: no samples, keyboards or synthesizers used in the making of this record. Many other artists have been proudly anti-synth. For example, Iron Maiden used a “close to live” sound on their records, and famously told a fan “you can’t make heavy metal with synthesisers”, only to fold later and make “Somewhere In Time” in 1986, replete with synth sounds.

From synths to AI, is the use of technology inevitable?

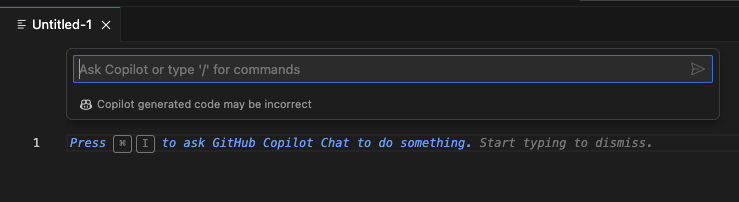

While I am against the use of LLMs in scientific publication, perhaps a turning point for me is Copilot. This is the LLM tool from GitHub that assists with coding. Controversially trained on millions of lines of code on GitHub, Copilot can rewrite scripts between languages, generate whole routines, or simply comment on and/or correct your own bad code.

At first I was a Copilot refusenik too. For the same reasons as with scientific writing, I thought: what serious coder would use this technology? Then, a professional programmer friend told me that everyone at his company was using it, and I thought I should reconsider. Next, a colleague, who was similarly enthusiastic, told me that we could access Copilot via GitHub Education. So I gave it a try.

In short, it’s a time saver. And a time waster. Learning how to use it efficiently is crucial.

I won’t do a full write-up here. Suffice it to say that the biggest time saver is using it to generate short pieces of code (even one-liners) that you would normally look up on Stack Overflow. The biggest time waster is, well, code that is incorrect.

With an understanding of how Copilot can help, it does speed up the process of writing code. Is it plagiarism? It’s a grey area. Is it plagiarism to copy-and-paste from Stack Overflow?

Could we declare “No LLMs” or “No AI” on our next paper?

Maybe we should. I have no plans to start using LLMs to do any writing of the manuscript. So I still see myself as a conscientious AI objector. On the other hand, some of our code is now generated with LLM assistance. It’s a small fraction but it’s there. So declaring “No AI!” would not be truthful.

As Queen and others who declared “No Synths!” found, taking a public stand on the AI issue may be a position that doesn’t age well.

Even without this change, how AI-free was our previous work? Everything in our last paper was written by my co-author and me, with no text suggested by an LLM. Then again, we used machine learning approaches for some of the image segmentation, and technically the dimension reduction methods that we use for analysis, e.g. PCA, are a form of machine learning, despite being 120 years old. So it could be argued that the future is already here and wider adoption of these tools is inevitable.

The controversy over the use of AI and LLMs in science and in scientific publications continues. It will be interesting to revisit this topic in another year’s time and see where the consensus lies.

—

The post title is explained in the post.

I assume you have no problem with scientific publications being copyedited. It is unclear whether your position against using LLMs in writing scientific publications applies to copyediting. If a publisher uses a copyediting process involving software that uses an LLM, would you object or remove the “no LLMs” declaration? Is it important to you that _YOU_ did not use an LLM or that the final written output did not rely on an LLM?

BTW, this comment is partially written with the help of Grammarly, which might or might not have used an LLM. Does that make any difference to whether you want to read this comment?

Thanks for the comment! I hadn’t considered this but in answer to your question: what’s important (to me) is that I didn’t use an LLM. I’m not someone who thinks that my written work is beyond improvement and have certainly benefitted from good copyediting in the past (as well as the opposite experience!). If technology is used to assist the person doing the copyediting, I would be OK with that. Unless the meaning is changed or something.

The dubious uses of LLMs, such as generating text with “thoughts” that are not the human author’s, are obscuring a far more mundane and reasonable use of LLMs in scientific and technical writing, which is copyediting with an LLM. For example, yesterday, I used https://copyaid.it to improve this wordy sentence:

https://gitlab.com/castedo/copyblast/-/blob/main/inputs/wordy/quick_start.md

to this improved sentence:

https://gitlab.com/castedo/copyblast/-/blob/main/expect/rev/wordy/0/quick_start.md

I have no problem with authors demonstrating that they write so well they don’t need an LLM. But for folks like me, readers are better off having my writing copyedited with an LLM. My writing is to communicate meaning, not demonstrate my writing skills, which I do not have and make no claim to have.

This comment does not demonstrate my writing skill; it has been improved by Grammarly (which seems to be using an LLM at times).

I agree with you that the issue is not so clear cut: it is neither “all LLMs are bad” nor “let’s just use this technology for everything”. There are mundane uses that can accelerate science or improve communication. This was the theme of the post really. Declaring “No Synths!” and banning technology can look a bit foolish when authors start to adopt the use of LLMs in some form or other.